January 14, 2025

Understanding and utilizing the specific privacy settings and opt-out options for each generative AI tool is crucial for protecting sensitive information and ensuring data privacy.

AI is a prevalent part of life nowadays, and many people are enthusiastic about using generative AI tools for all sorts of purposes, from personal to business or even therapeutic. However, they often overlook significant privacy concerns.

AI tools like OpenAI’s ChatGPT, Google’s Gemini, Microsoft Copilot, and Apple Intelligence have different privacy policies, and many users are unaware of how their data is used and stored.

Have you ever used ChatGPT to help write your resume or cover letter? In doing so, you may have unknowingly shared private information, raising concerns about how this data could be used or possibly misused. While these AI tools offer valuable assistance, it’s crucial to be aware of the potential privacy implications. Your personal details, professional history, and other sensitive information could be stored and processed by the AI, which might then be used for training models or other purposes beyond your control.

Read over privacy policies

Going over formal documentation can be tedious, but it is crucial for consumers to thoroughly read privacy policies to fully understand how their data is used, retained, and deleted. Privacy policies often contain detailed information about what data is collected, how it is used, who it is shared with, and how long it is stored.

If these critical questions are not easily answered within the privacy policy, it should raise a red flag. Lack of transparency can indicate that the company may not have robust privacy protections in place, or they may be intentionally vague to avoid disclosing potentially problematic data practices. In such cases, consumers should be cautious about using the service and consider looking for alternatives that offer clearer and more user-friendly privacy practices.

By taking the time to understand the privacy policies of the AI tools they use, consumers can make informed decisions about which tools to trust with their personal information.

Avoid entering sensitive data into AI models

The best way to protect your privacy is to avoid including sensitive information in your interactions with AI tools. In fact, Google itself has issued warnings against sharing personal information with AI. Many companies have implemented strict policies that limit the use of generative AI tools to safeguard proprietary information. Individuals should adopt a similar approach and refrain from using AI for any non-public or sensitive data.

For example, when you use AI to draft resumes, cover letters, or other documents that contain personal details, there is a risk that this information could be stored, processed, and potentially used for purposes beyond your control. The data you input might be used to train AI models, which could compromise your privacy.

While it is relatively straightforward to remove personal data from the web, untraining AI models that have already incorporated this data is a much more complex process. This issue is still under active research, and effective methods for fully removing personal data from AI models have yet to be established.

To mitigate these risks, always review the privacy policies and data handling practices of the AI tools you use. Look for features that allow you to opt out of data sharing and ensure that your sensitive information is not retained or used for model training. By taking these precautions, you can better protect your privacy while still benefiting from the capabilities of generative AI.
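One practical way to act on the advice in this section is to strip obvious identifiers from a draft before pasting it into an AI tool. The Python sketch below is a minimal illustration, not a complete safeguard: the `PATTERNS` table and the `redact` function are hypothetical names introduced here, and the two regular expressions only catch common email and phone formats.

```python
import re

# Hypothetical patterns for illustration only. Real personal data takes
# many more forms (names, addresses, ID numbers) that these do not catch.
PATTERNS = {
    "EMAIL": re.compile(r"[\w.+-]+@[\w-]+(?:\.[\w-]+)+"),
    "PHONE": re.compile(r"\(?\+?\d[\d\s().-]{7,}\d"),
}


def redact(text: str) -> str:
    """Replace each pattern match with a placeholder such as [EMAIL]."""
    for label, pattern in PATTERNS.items():
        text = pattern.sub(f"[{label}]", text)
    return text


draft = "Jane Doe, jane.doe@example.com, (555) 123-4567. Seeking an IT support role."
print(redact(draft))
```

Note that the name in the sample draft is left untouched. Pattern matching of this kind reduces accidental exposure of contact details, but you still need to read the text yourself before sharing it.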

Be aware of privacy settings

The lack of universal opt-out options across different AI tools makes it crucial for consumers to understand each tool’s privacy settings. Each generative AI tool has its own privacy policies and may offer specific opt-out options.

For instance, Gemini allows users to set a retention period and delete certain data, among other activity controls. ChatGPT users can opt out of having their data used for model training.

For generative AI used for search, setting short data retention periods and deleting chats after use can help protect privacy. This practice reduces the risk of sensitive information being retained or used for model training.

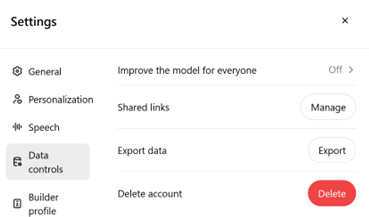

How to opt out of having data used for model training by ChatGPT

- Navigate to the profile icon on the bottom-left of the page.

- Go to Settings.

- Select Data Controls under the Settings header.

- Disable the feature “Improve the model for everyone.”

While this is disabled, new conversations won’t be used to train ChatGPT’s models.
